Kubernetes (k8s) is an open-source system for automating the deployment, scaling, and management of containerized applications. It is a portable, extensible platform that facilitates both declarative configuration and automation of containerized workloads.

Containers are similar to virtual machines, but they are considered lightweight because applications share the host operating system's kernel instead of each running a full OS, while still being isolated from one another. Each container has its own filesystem, share of CPU and memory, process space, and more, which makes containers a great way to package code and run applications. And because they are decoupled from the underlying infrastructure, containers are easily portable across clouds and OS distributions.

With the expanding adoption of containers, Kubernetes has become the standard way to deploy, maintain, and operate containerized applications. However, achieving a frictionless Kubernetes development workflow can sometimes be painful.

In this blog, we will look at the problems in creating a successful Kubernetes workflow and the ways to solve them.

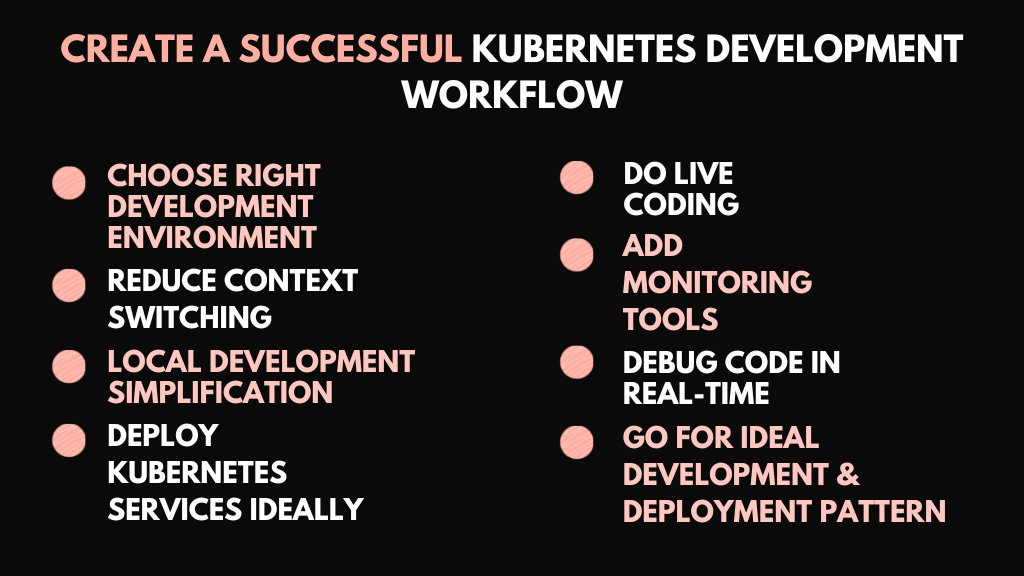

Ways to Create a Powerful Kubernetes Development Workflow

Starting a project and deploying it on Kubernetes can be time-consuming. You can easily get caught up in infrastructure configurations instead of writing the business logic. Tools and practices that can help a developer focus on the code while streamlining the Kubernetes workflow are the keys to enhancing productivity.

-

Choose the right Development Environment for Kubernetes

There is a vast number of integrated development environments (IDEs) to choose from, and as a developer, you have probably spent most of your time working in your favorite one.

When it comes to Kubernetes, however, make sure the IDE provides a containerized build/runtime environment in which services can always be built and run. This simplifies onboarding for new developers and ensures parity between development and production environments.

If you still want to use the IDE you are used to, try adding extensions that make developing, deploying, managing, and scaling on Kubernetes easier. Some of the extensions and tools you can use are Cloud Code, CodeStream, Azure DevOps, Spring Tools 4, etc., many of which integrate with IntelliJ and Visual Studio Code.

For a local development workflow, you will also need to install git (for source control), Docker (to build and run containers), kubectl (to manage deployments), Telepresence (to develop services locally), and Forge (to deploy services into Kubernetes).
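Before you start, it can help to confirm that these tools are actually on your PATH. The following is a minimal sketch in Python, assuming the executables are named git, docker, kubectl, telepresence, and forge (the Forge executable name in particular is an assumption). It only checks that each tool is present, not which version is installed.

```python
from __future__ import annotations

import shutil

# Executable names assumed for the tools listed above; adjust to your setup.
# "forge" in particular is an assumption about how the Forge CLI is installed.
REQUIRED_TOOLS = ["git", "docker", "kubectl", "telepresence", "forge"]


def missing_tools(tools: list[str]) -> list[str]:
    """Return the tools that cannot be found on the current PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools(REQUIRED_TOOLS)
    if missing:
        print("Missing tools:", ", ".join(missing))
    else:
        print("All required tools are installed.")
```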

If you don't want to rely on local resources, you can use a browser-based development environment such as Google's Cloud Shell Editor or Azure Cloud Shell. Because such environments typically come with tools like kubectl, Skaffold, and Docker already available, they reduce setup time and make it easier for developers to manage Kubernetes infrastructure directly from within the IDE.

-

Reduce Context Switching

In operating systems, context switching means storing the state (context) of a process so that its execution can be restored and resumed later. This is what allows multiple processes to share a single CPU, and it is a crucial feature of multitasking systems. However, every switch has a cost, and the same is true for developers: each time you jump between tools, you lose time and add friction to the workflow.

During Kubernetes development, you might have to switch between documentation, the cloud console, your IDE, and logs. Choosing a web-based development environment, or adding an extension with these features built in to your local one, helps keep this constant context switching to a minimum.

-

Local Development Simplification

IDE extensions usually streamline the development and feedback loop on your local machine.

Like most developers, you probably want to focus on business logic rather than on how to run it in a container, and Buildpacks can help you achieve that. Cloud Native Buildpacks, an open-source project, create production-ready, secure container images directly from source code, with no need to write and maintain a Dockerfile.

Moreover, Buildpacks work with CodeStream, Cloud Code, and browser-based development environments, which can also use remote clusters to offload CPU and memory usage from your machine. They also let developers easily build containers and deploy them locally to Minikube or Docker Desktop.

Minikube, in turn, creates a Kubernetes cluster on your local machine, giving you a platform to run and experiment with Kubernetes applications.

With an online development environment, the repetitive tasks of building container images, updating Kubernetes manifests, and redeploying applications are simplified. These web-based IDEs use Skaffold, a command-line tool for continuous development of applications on Kubernetes, to run all of these steps automatically every time the code changes.

Note also that Skaffold's watch mode monitors your local source code for changes and then rebuilds and redeploys the application to the Kubernetes cluster in real time. Skaffold's File Sync feature goes a step further: for files that match configured sync rules, it copies the changes directly into running containers, skipping the rebuild and redeploy so developers can see code changes in seconds.
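The decision behind watch mode and file syncing can be illustrated with a small polling loop: snapshot the source tree, detect which files changed, and either sync them (when every change matches a sync pattern, such as static assets) or trigger a full rebuild. This is a hypothetical Python sketch of the idea, not Skaffold's implementation or configuration format; the function names, the polling approach, and the on_sync/on_rebuild hooks are all assumptions.

```python
from __future__ import annotations

import fnmatch
import os
import time
from typing import Callable


def snapshot(root: str) -> dict[str, float]:
    """Map every file under root (as a relative path) to its modification time."""
    mtimes: dict[str, float] = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                mtimes[os.path.relpath(path, root)] = os.path.getmtime(path)
            except FileNotFoundError:
                continue  # file was deleted between listing and stat
    return mtimes


def changed_files(before: dict[str, float], after: dict[str, float]) -> set[str]:
    """Files that were added, removed, or modified between two snapshots."""
    return {p for p in before.keys() | after.keys() if before.get(p) != after.get(p)}


def plan_action(changed: set[str], sync_patterns: list[str]) -> str:
    """Return 'sync' if every change matches a sync pattern, else 'rebuild'."""
    if not changed:
        return "noop"
    if all(any(fnmatch.fnmatch(p, pat) for pat in sync_patterns) for p in changed):
        return "sync"
    return "rebuild"


def watch(
    root: str,
    sync_patterns: list[str],
    on_sync: Callable[[set[str]], None],
    on_rebuild: Callable[[set[str]], None],
    interval: float = 1.0,
) -> None:
    """Poll root forever and call the matching hook for each batch of changes."""
    previous = snapshot(root)
    while True:
        time.sleep(interval)
        current = snapshot(root)
        changed = changed_files(previous, current)
        action = plan_action(changed, sync_patterns)
        if action == "sync":
            on_sync(changed)  # e.g. copy the files into the running container
        elif action == "rebuild":
            on_rebuild(changed)  # e.g. rebuild the image and redeploy
        previous = current
```

For example, watch("src", ["static/*", "*.html"], on_sync=..., on_rebuild=...) would sync template and asset edits while rebuilding for any change to application code. Polling keeps the sketch dependency-free; a real tool would typically use OS file-change notifications instead.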

-

Opt for the Suitable Way to Deploy Kubernetes Services

Imperative and declarative are the two ways a developer can deploy Kubernetes services. Imperative deployment means creating and changing deployments directly through command-line commands, while declarative deployment means describing the desired state of the deployment in a YAML file and letting Kubernetes bring the cluster to that state.

Although the imperative method can be faster at first, it becomes hard to keep track of changes as you manage deployments over time.

On the other hand, declarative deployments are self-documenting. This means that every configuration file can be managed in Git/GitHub, allowing many developers to work on the same deployments with clear details of what was changed by whom.
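To make the declarative approach concrete, here is a minimal Python sketch that builds a Deployment manifest as plain data. It emits JSON rather than YAML only because Python's standard library has no YAML writer; kubectl apply accepts either format. The app name, image, and port are hypothetical placeholders.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 1, port: int = 8080) -> dict:
    """Build a minimal apps/v1 Deployment that describes the desired state."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the Pod template.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical app name and image, for illustration only.
    manifest = deployment_manifest("hello-web", "registry.example.com/hello-web:1.0.0", replicas=2)
    print(json.dumps(manifest, indent=2))
```

Redirecting the output to a file such as deployment.json, committing it, and running kubectl apply -f deployment.json makes the file, rather than someone's shell history, the record of what should be running; a later change to replicas then shows up as a one-line diff in code review.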

Moreover, declarative deployments let you apply GitOps principles, where the configuration stored in Git serves as the single source of truth for what should be running in the cluster.
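GitOps tooling works by repeatedly comparing the desired state stored in Git with the live state in the cluster. The function below is a simplified, hypothetical Python sketch of that comparison step, not the algorithm of any particular tool: it walks a desired manifest and reports the fields where the live object differs, ignoring extra fields the cluster fills in on its own (such as status or a UID).

```python
from __future__ import annotations

from typing import Any


def drift(desired: Any, live: Any, path: str = "") -> list[str]:
    """Return the field paths where the live state differs from the desired state.

    Only fields present in `desired` are compared, so fields the cluster adds
    by itself (status, uid, defaulted values) do not count as drift.
    """
    where = path or "<root>"
    if isinstance(desired, dict):
        if not isinstance(live, dict):
            return [where]
        found: list[str] = []
        for key, want in desired.items():
            child = f"{path}.{key}" if path else str(key)
            if key not in live:
                found.append(child)  # field missing from the live object
            else:
                found.extend(drift(want, live[key], child))
        return found
    if isinstance(desired, list):
        # Lists (e.g. containers) are compared element by element, by position.
        if not isinstance(live, list) or len(live) != len(desired):
            return [where]
        found = []
        for i, (want, have) in enumerate(zip(desired, live)):
            found.extend(drift(want, have, f"{path}[{i}]"))
        return found
    return [] if desired == live else [where]


if __name__ == "__main__":
    desired = {"spec": {"replicas": 2}}
    live = {"metadata": {"uid": "1234"}, "spec": {"replicas": 3}}
    print(drift(desired, live))  # ['spec.replicas']
```

A reconciler built on this idea would re-apply the manifest from Git whenever drift() returns a non-empty list.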

For these reasons, declarative deployment of Kubernetes services is usually the better choice over the imperative approach.

-

Do Live Coding

Who doesn't want a quick feedback cycle while developing a service? Everyone wants to build and test code immediately after making changes. However, the deployment process we just discussed can add latency, because building and deploying containers with the latest changes takes time.

Tools like Telepresence and Gefyra let you develop a service locally with a bi-directional proxy to a remote Kubernetes cluster, while DevSpace takes a related approach by syncing your local code into containers running in the cluster.

-

Add Monitoring Tools

Monitoring is one of the biggest challenges in Kubernetes adoption. Monitoring a distributed application environment has never been easy, and Kubernetes adds further complexity.

Fortunately, several monitoring tools can be added to the Kubernetes ecosystem to help. Some of the most widely used open-source tools are Prometheus, the ELK Stack, Grafana, Fluent Bit, kubewatch, cAdvisor, kube-state-metrics, Jaeger, and kube-ops-view.
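For Prometheus to scrape an application, the application typically exposes its metrics over HTTP in Prometheus's text exposition format. In practice you would use an official client library (for Python, prometheus_client); the standard-library-only sketch below just shows what that format looks like and how a /metrics endpoint serves it. The metric name http_requests_total, its labels, the in-process counter, and port 8000 are hypothetical.

```python
from __future__ import annotations

from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical in-process counter: request counts keyed by (method, status code).
REQUEST_COUNTS: dict[tuple[str, str], int] = {("GET", "200"): 0}


def escape_label_value(value: str) -> str:
    """Escape backslashes, double quotes, and newlines as the text format requires."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_counter(name: str, help_text: str, samples: list[tuple[dict[str, str], float]]) -> str:
    """Render one counter metric family in the Prometheus text exposition format."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} counter"]
    for labels, value in samples:
        if labels:
            pairs = ",".join(f'{key}="{escape_label_value(val)}"' for key, val in labels.items())
            lines.append(f"{name}{{{pairs}}} {value}")
        else:
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


class MetricsHandler(BaseHTTPRequestHandler):
    """Serves the current counter values at /metrics."""

    def do_GET(self) -> None:
        if self.path != "/metrics":
            self.send_error(404)
            return
        samples = [({"method": m, "code": c}, n) for (m, c), n in REQUEST_COUNTS.items()]
        body = render_counter("http_requests_total", "Total HTTP requests.", samples).encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8000) -> None:
    """Serve /metrics until interrupted (blocks the calling thread)."""
    HTTPServer(("", port), MetricsHandler).serve_forever()
```

Calling serve(8000) and pointing a Prometheus scrape job at the Pod's port 8000 would then let Grafana or alerting rules build on the collected counters.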

-

Debug Code in Real-Time

Debugging applications running on Kubernetes clusters isn't easy. There are numerous methods for debugging running pods, but replicating the local debugging experience in the IDE is difficult because you need to set up port forwarding and expose debugging ports.

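Tools like Skaffold, Helm, SonarLint, etc., can make the debugging experience smoother because web-based IDEs can easily leverage them. Skaffold, for example, provides a debug mode that IDEs use to attach a debugger, so you can place breakpoints in code running in the cluster.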
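For comparison, the manual step that such tools automate is forwarding a debug port from a pod to your machine with kubectl port-forward. The Python sketch below wraps that command; it assumes the debugger inside the container already listens on a port (5678 is debugpy's conventional default and 9229 is the Node.js inspector's), and the pod and namespace names are hypothetical.

```python
from __future__ import annotations

import subprocess
from typing import Optional


def port_forward_command(
    pod: str, local_port: int, remote_port: int, namespace: Optional[str] = None
) -> list[str]:
    """Build a `kubectl port-forward` invocation for a pod's debug port."""
    for port in (local_port, remote_port):
        if not 1 <= port <= 65535:
            raise ValueError(f"invalid port: {port}")
    cmd = ["kubectl", "port-forward"]
    if namespace:
        cmd += ["--namespace", namespace]
    cmd += [f"pod/{pod}", f"{local_port}:{remote_port}"]
    return cmd


def start_port_forward(
    pod: str, local_port: int, remote_port: int, namespace: Optional[str] = None
) -> subprocess.Popen:
    """Start port forwarding in the background; call .terminate() to stop it."""
    return subprocess.Popen(port_forward_command(pod, local_port, remote_port, namespace))
```

While the forward is running, the IDE's remote-attach debug configuration can point at localhost on the local port, which is the wiring that IDE integrations set up for you.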

If you debug against a local Kubernetes cluster, it becomes easier to discover runtime errors before they reach integration, staging, or production. And the sooner you identify errors and bugs, the sooner they are resolved, which speeds up development.

-

Go for the Ideal Development & Deployment Pattern

Another important step in creating a powerful and successful Kubernetes development workflow is choosing the development and deployment patterns that best suit the project you are working on.

The patterns for developing and deploying applications on Kubernetes fall into these categories:

- Foundational patterns cover the core Kubernetes concepts. They act as the basis for building container-based, cloud-native applications.
- Behavioral patterns build on the foundational patterns and add granularity to managing the different types of interaction between containers and the platform.
- Structural patterns describe how to organize containers within a Kubernetes Pod.
- Configuration patterns show how to handle application configuration in Kubernetes, including the detailed steps for connecting applications to their configuration.
- Advanced patterns cover advanced Kubernetes concepts, such as ways to extend the Kubernetes platform or build container images within the cluster.
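As a small example of the configuration patterns, an application can read its settings from environment variables, which Kubernetes can populate from a ConfigMap (for instance through envFrom with a configMapRef in the container spec). The Python sketch below assumes hypothetical variable names LOG_LEVEL, DB_HOST, and DB_PORT with illustrative defaults; it fails fast when a required value is missing rather than starting with a broken configuration.

```python
from __future__ import annotations

import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class AppConfig:
    log_level: str
    db_host: str
    db_port: int


def load_config(env: Mapping[str, str] = os.environ) -> AppConfig:
    """Read configuration from environment variables (e.g. injected from a ConfigMap)."""
    if "DB_HOST" not in env:
        # Required setting: fail at startup instead of at the first query.
        raise RuntimeError("DB_HOST must be set")
    return AppConfig(
        log_level=env.get("LOG_LEVEL", "info"),  # optional, with a default
        db_host=env["DB_HOST"],
        db_port=int(env.get("DB_PORT", "5432")),  # env values are strings
    )
```

Passing the environment in as a mapping keeps the function easy to test without touching the real process environment, while the default still reads os.environ inside the container.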

Conclusion

That covers the main ways to make your Kubernetes development workflow powerful and frictionless. We hope this helps you work with Kubernetes and microservices in your future projects.

And if you are a business owner who wants to build a microservices application and hire developers from a trustworthy, experienced company, get in touch with us now!